Ever wondered how those old black-and-white photos get their vibrant colors restored? What if I told you that artificial intelligence can now automatically add realistic colors to grayscale images with remarkable accuracy?

Today, we're diving deep into an exciting Auto-Colorization project that uses Convolutional Neural Networks (CNN) to breathe life into monochrome images. This isn't just an ordinary colorization tool: it also includes an Ethnicity Aware Autocolorization system that takes cultural and ethnic characteristics into account for more accurate and sensitive results.

The Magic Behind Auto-Colorization

What Makes This Project Special?

The Auto-Colorization project by rrupeshh stands out in the computer vision landscape for several compelling reasons:

- Standard Auto-Colorization: Robust CNN-based colorization for general grayscale images

- Ethnicity Aware System: Advanced pipeline that detects and respects ethnic characteristics

- Pre-trained Models: Ready-to-use models for immediate deployment

- Comprehensive Testing: Evaluation with PSNR, SSIM, and LPIPS metrics against the Zhang et al. model

The project goes beyond simple colorization by incorporating cultural sensitivity, making it a significant advancement in AI-powered image processing.

Technical Deep Dive

Architecture Overview

The core CNN model follows an encoder-decoder architecture. It takes a 256x256 grayscale image (the L channel) as input and predicts the two a*b* color channels:

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Conv2D, UpSampling2D

model = Sequential()

# Input Layer - Grayscale Input (256x256x1)
model.add(Input(shape=(256, 256, 1)))
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))

# Encoder Layers - Feature Extraction (each stride-2 conv halves height and width)
model.add(Conv2D(64, (3, 3), activation='relu', padding='same', strides=2))
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(Conv2D(128, (3, 3), activation='relu', padding='same', strides=2))
model.add(Conv2D(256, (3, 3), activation='relu', padding='same'))
model.add(Conv2D(256, (3, 3), activation='relu', padding='same', strides=2))
model.add(Conv2D(512, (3, 3), activation='relu', padding='same'))

# Decoder Layers - Color Reconstruction (each UpSampling2D doubles height and width)
model.add(Conv2D(256, (3, 3), activation='relu', padding='same'))
model.add(Conv2D(128, (3, 3), activation='relu', padding='same'))
model.add(UpSampling2D((2, 2)))
model.add(Conv2D(64, (3, 3), activation='relu', padding='same'))
model.add(UpSampling2D((2, 2)))
model.add(Conv2D(32, (3, 3), activation='relu', padding='same'))

# Output Layer - Color Channels (a*b* in LAB color space; tanh keeps them in [-1, 1])
model.add(Conv2D(2, (3, 3), activation='tanh', padding='same'))
model.add(UpSampling2D((2, 2)))

# MSE loss and RMSprop optimizer, as listed under Training Strategy
model.compile(optimizer='rmsprop', loss='mse')
```

Why This Architecture Works

- Progressive Downsampling: Three stride-2 convolutions shrink the feature maps from 256x256 to 32x32 while the number of filters grows, allowing the model to capture both local details and global context.

- Feature Hierarchy: Starting from 64 filters and scaling up to 512 creates a rich feature hierarchy that can represent complex image patterns.

- Resolution Recovery: The decoder does not mirror the encoder layer for layer, but its three 2x UpSampling2D layers undo the encoder's three stride-2 convolutions, restoring the full 256x256 resolution.

- LAB Color Space: LAB separates luminance (L) from color information (a*b*). The grayscale input already is the L channel, so the network only has to predict the two a*b* channels instead of three full RGB channels, which makes learning more efficient.
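To check that the downsampling and upsampling really cancel out, here is a small pure-Python shape trace of the layer list above. It is a sketch, not a Keras model: `LAYERS` is a hand transcription of the architecture, and the only rules applied are the ones Keras uses for `padding='same'` convolutions (output size = ceil(input / stride)) and for `UpSampling2D((2, 2))` (height and width doubled, channels unchanged).

```python
import math

# Hand transcription of the architecture: (layer kind, filters, stride or factor).
LAYERS = [
    ("conv", 64, 1), ("conv", 64, 2),
    ("conv", 128, 1), ("conv", 128, 2),
    ("conv", 256, 1), ("conv", 256, 2),
    ("conv", 512, 1),
    ("conv", 256, 1), ("conv", 128, 1), ("up", None, 2),
    ("conv", 64, 1), ("up", None, 2),
    ("conv", 32, 1),
    ("conv", 2, 1), ("up", None, 2),
]


def trace_shapes(height, width, channels, layers):
    """Return the (H, W, C) output shape after each layer."""
    shapes = []
    h, w, c = height, width, channels
    for kind, filters, step in layers:
        if kind == "conv":
            # 'same' padding: spatial size is divided by the stride, rounded up.
            h, w, c = math.ceil(h / step), math.ceil(w / step), filters
        else:
            # UpSampling2D repeats each pixel step x step times.
            h, w = h * step, w * step
        shapes.append((h, w, c))
    return shapes


shapes = trace_shapes(256, 256, 1, LAYERS)
for (kind, _, _), shape in zip(LAYERS, shapes):
    print(f"{kind:>4} -> {shape}")
```

The trace bottoms out at 32x32x512 and ends at 256x256x2, matching the grayscale input size. It also shows that the 2-channel tanh layer runs at 128x128 and the final `UpSampling2D` (nearest-neighbor by default) repeats each predicted a*b* value over a 2x2 block, so color is effectively predicted at half resolution while the full-resolution L channel carries the fine detail.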

Training Strategy

The model employs several smart training techniques:

- Loss Function: Mean Squared Error (MSE) for precise color prediction

- Optimizer: RMSprop for stable convergence

- Data Split: 95% of the images for training, 5% held out for testing

- Image Size: 256x256 pixels as a trade-off between image detail and computational cost

- Training Duration: 500 epochs with continuous monitoring
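To make the loss and optimizer choices concrete, here is a from-scratch numpy sketch of MSE over the a*b* channels and of the RMSprop update rule. It is an illustration of what `compile(optimizer='rmsprop', loss='mse')` asks Keras to do, not the project's training code: the update is applied directly to a toy prediction array instead of to network weights, `rho` and `eps` default to the values Keras documents for RMSprop, and the larger learning rate in the demo is an assumption chosen only so the toy problem converges quickly.

```python
import numpy as np


def mse(pred, target):
    """Mean squared error over every pixel and both a*b* channels."""
    return float(np.mean((pred - target) ** 2))


def rmsprop_step(param, grad, cache, lr=1e-3, rho=0.9, eps=1e-7):
    """One RMSprop update.

    `cache` is a running average of squared gradients; dividing by its square
    root gives every element its own adaptive step size.
    """
    cache = rho * cache + (1.0 - rho) * grad ** 2
    param = param - lr * grad / (np.sqrt(cache) + eps)
    return param, cache


# Toy problem: fit an (8, 8, 2) "a*b* prediction" directly to a random target
# in [-1, 1], the output range of the model's tanh layer.
rng = np.random.default_rng(0)
target = rng.uniform(-1.0, 1.0, size=(8, 8, 2))
pred = np.zeros_like(target)
cache = np.zeros_like(target)

start_loss = mse(pred, target)
for _ in range(300):
    grad = 2.0 * (pred - target) / pred.size  # gradient of the MSE w.r.t. pred
    pred, cache = rmsprop_step(pred, grad, cache, lr=0.02)
final_loss = mse(pred, target)
print(f"MSE before: {start_loss:.4f}, after: {final_loss:.6f}")
```

Because RMSprop normalizes each gradient by its recent magnitude, the step size stays near `lr` even when the raw MSE gradients are tiny, which is one reason it is a common choice for regression targets like these.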

The Ethnicity Aware Innovation

Beyond Basic Colorization

What sets this project apart is its Ethnicity Aware Autocolorization component. This advanced system:

- Detects Ethnic Characteristics: Identifies facial features and ethnic traits in the input image

- Applies Cultural Context: Uses appropriate color palettes based on detected characteristics

- Ensures Respectful Representation: Maintains cultural sensitivity in colorization choices

- Specialized Models: Includes dedicated models (Colorize.h5, ColorizeTuned.h5) for different scenarios

Key Components

The ethnicity-aware system consists of several specialized notebooks:

- Ethnic Detection Final.ipynb: Identifies ethnic characteristics

- Colorization Final.ipynb: Main colorization pipeline

- Final Testing Both Pipeline.ipynb: Integrated testing framework
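The notebooks are not reproduced in this article, so the following is only a hypothetical sketch of how a detect-then-colorize pipeline of this kind can be wired together: a detector returns a label, and the label selects which colorization model runs. Every name here (`colorize_two_stage`, the `"general"` and `"specialized"` keys, the fallback behavior) is an assumption for illustration, and stub functions stand in for the trained Keras models. Which model the project actually uses for which detection result is not described here.

```python
from typing import Callable, Dict, Tuple

import numpy as np

# Hypothetical interfaces: a detector maps a grayscale image to a label string,
# and a colorizer maps a grayscale (H, W) image to an RGB (H, W, 3) image.
Detector = Callable[[np.ndarray], str]
Colorizer = Callable[[np.ndarray], np.ndarray]


def colorize_two_stage(gray: np.ndarray, detect: Detector,
                       colorizers: Dict[str, Colorizer],
                       fallback: str) -> Tuple[str, np.ndarray]:
    """Stage 1 detects a label; stage 2 runs the colorizer registered for it.

    Labels without a dedicated colorizer use colorizers[fallback].
    """
    label = detect(gray)
    colorizer = colorizers.get(label, colorizers[fallback])
    return label, colorizer(gray)


def fixed_label_detector(label: str) -> Detector:
    """Stub detector that always returns `label` (stands in for a classifier)."""
    return lambda gray: label


def gray_to_rgb(gray: np.ndarray) -> np.ndarray:
    """Stub 'general' colorizer: copies the gray values into all three channels."""
    return np.stack([gray, gray, gray], axis=-1)


def tinted(gray: np.ndarray) -> np.ndarray:
    """Stub 'specialized' colorizer: applies a fixed tint."""
    return np.stack([gray, 0.9 * gray, 0.8 * gray], axis=-1)


models = {"general": gray_to_rgb, "specialized": tinted}
image = np.full((4, 4), 0.5)
label, rgb = colorize_two_stage(image, fixed_label_detector("specialized"),
                                models, fallback="general")
print(label, rgb.shape)  # specialized (4, 4, 3)
```

Keeping detection and colorization as separate callables mirrors the split into separate notebooks: either stage can be retrained or swapped without touching the other.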

Impressive Results

Let's examine the model's performance through actual outputs from the research:

Result Showcase

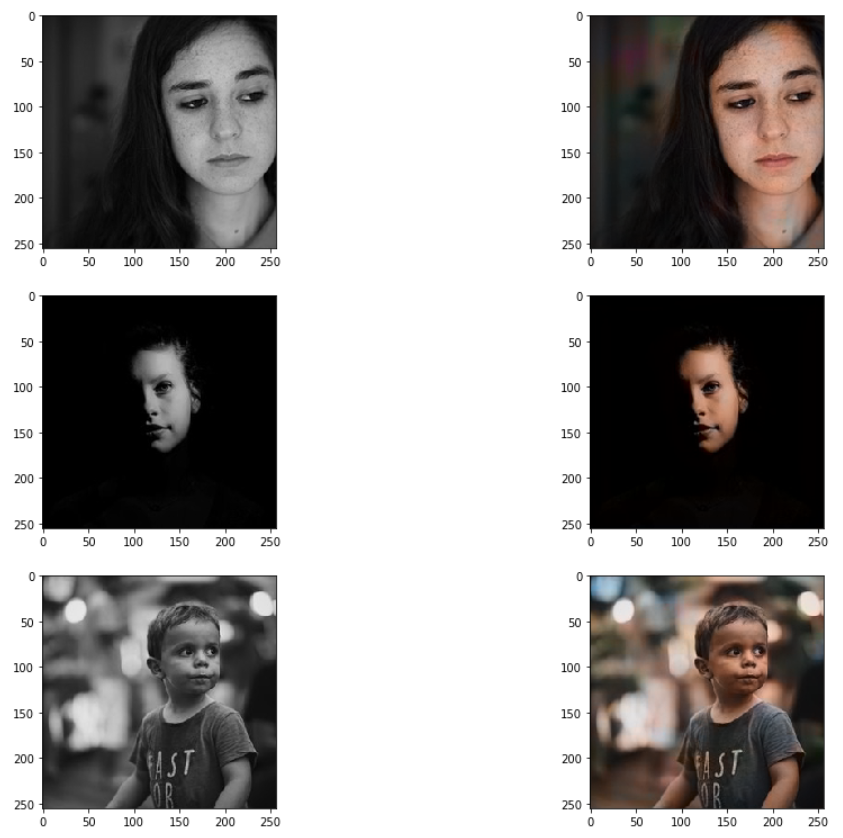

The following images demonstrate the remarkable capability of the auto-colorization system across diverse subjects and ethnicities:

Set 1: Diverse Portrait Colorization

The first set showcases the model's ability to handle different facial features, lighting conditions, and ethnic backgrounds with impressive accuracy.

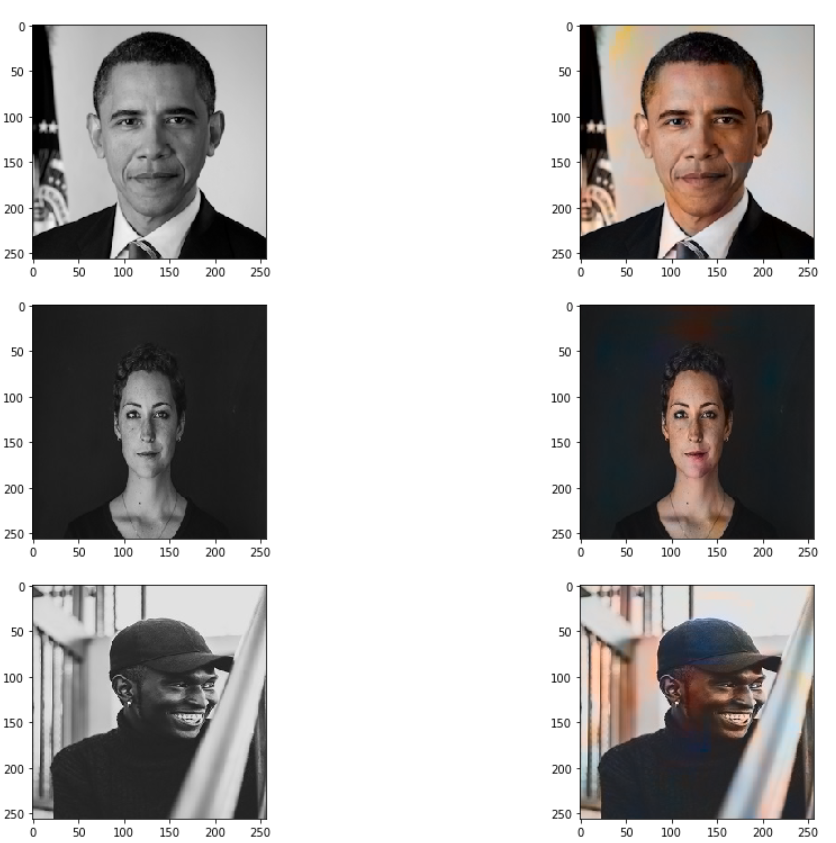

Set 2: Complex Facial Scenarios

Including notable figures like Obama, these results demonstrate the system's robustness across different skin tones and facial structures, maintaining natural and realistic colorization.

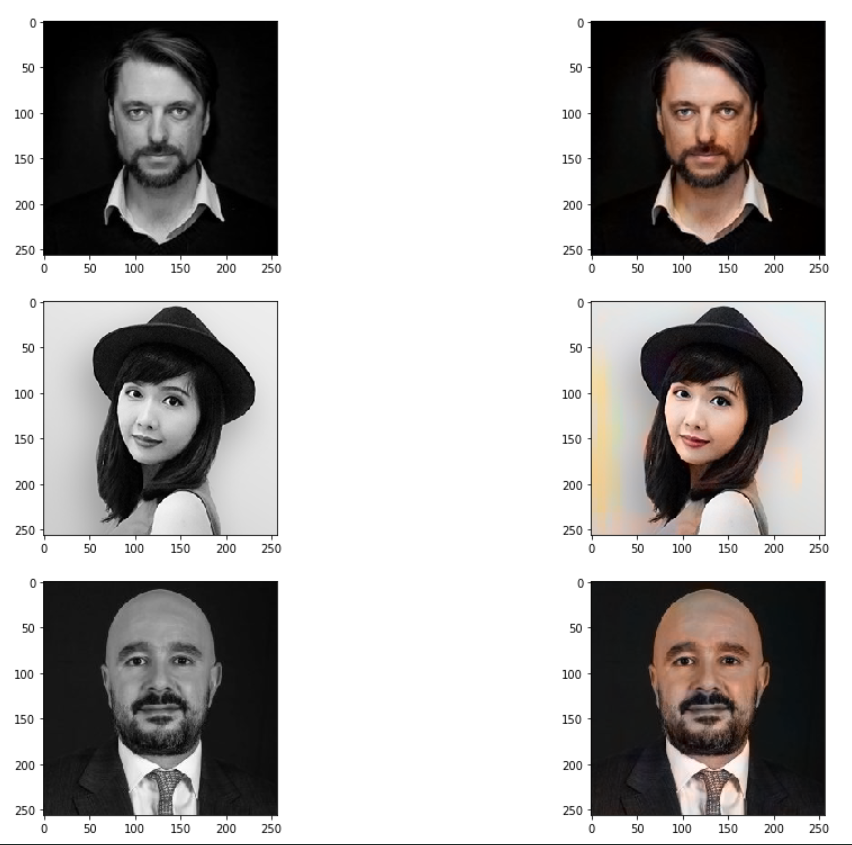

Set 3: Varied Demographics

The final set illustrates the model's versatility in handling different ages, genders, and ethnic backgrounds while preserving the authentic characteristics of each subject.

Performance Metrics

Based on comprehensive testing documented in the research, the model demonstrates excellent performance across multiple evaluation criteria:

Comparative Analysis vs. State-of-the-Art

The model was rigorously compared against the Zhang et al. model, a benchmark in automatic colorization, using three key metrics:

PSNR (Peak Signal-to-Noise Ratio) Results:

- Our Model: 25.16 dB average PSNR

- Zhang et al. Model: 24.44 dB average PSNR

- Advantage: Our model achieves higher PSNR, meaning lower pixel-wise error against the original color images
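For reference, PSNR measures pixel-wise error against the original color image on a logarithmic scale: PSNR = 10 · log10(MAX² / MSE). The numpy sketch below implements that standard definition, assuming images scaled to [0, 1] so that MAX = 1; the research may have computed it on a different pixel scale or color space.

```python
import numpy as np


def psnr(reference, prediction, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    reference = np.asarray(reference, dtype=np.float64)
    prediction = np.asarray(prediction, dtype=np.float64)
    mse = np.mean((reference - prediction) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


truth = np.full((8, 8, 3), 0.5)
guess = truth + 0.1          # every pixel off by 0.1, so MSE = 0.01
print(psnr(truth, guess))    # about 20 dB
```

Because the scale is logarithmic, a 0.72 dB advantage (25.16 vs. 24.44 dB) corresponds, for a single image, to roughly 15% lower MSE, since 10^(-0.072) ≈ 0.85. Averages of per-image PSNR values do not convert back to MSE exactly, so treat this only as a rough reading of the gap.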

SSIM (Structural Similarity Index) Results:

- Our Model: 0.9388 average SSIM

- Zhang et al. Model: 0.9499 average SSIM

- Analysis: Zhang's model shows slightly better structural preservation, but our model maintains excellent structural integrity
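SSIM compares means, variances, and covariance instead of raw pixel differences, which is why it tracks structure rather than exact color values. The standard metric (Wang et al.) averages the formula over small sliding windows, as scikit-image's `structural_similarity` does; the numpy sketch below is a deliberately simplified single-window (global) version with the usual constants K1 = 0.01 and K2 = 0.03. It shows how the formula behaves and will not reproduce the reported 0.9388.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole image (no sliding window)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


rng = np.random.default_rng(0)
img = rng.random((16, 16))
print(global_ssim(img, img))        # 1.0 for identical images
print(global_ssim(img, 1.0 - img))  # negative: the structure is inverted
```

A score near 1 means the two images rise and fall together; a uniform color shift mainly lowers the luminance term, while scrambled or inverted structure drives the covariance term down.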

LPIPS (Learned Perceptual Image Patch Similarity) Results:

- Our Model: 0.1422 average LPIPS

- Zhang et al. Model: 0.1372 average LPIPS

- Analysis: Zhang's model performs marginally better in perceptual similarity, with both models showing competitive results

Summary of Performance Metrics

| Metric | Our Model | Zhang et al. Model | Performance Analysis |
|---|---|---|---|
| PSNR (dB, higher is better) | 25.1627 | 24.4418 | ✓ Superior: lower pixel-wise error |
| SSIM (higher is better) | 0.9388 | 0.9499 | Competitive: strong structural preservation |
| LPIPS (lower is better) | 0.1422 | 0.1372 | Competitive: close perceptual quality |

Key Performance Insights

- Superior PSNR Performance: Our model achieves a higher average PSNR than the benchmark, with the largest margin reported on Image 6, where it significantly surpasses Zhang's model.

- Competitive Structural Integrity: Zhang's model scores slightly higher on SSIM, but our model's average of 0.9388 still indicates strong structural similarity to the original images.

- Strong Perceptual Quality: The average LPIPS scores differ by only 0.005 (lower is better), so the two models are close in perceptual similarity, with our model doing better in certain test scenarios.

- Ethnicity-Aware Results: The research reports PSNR scores for the ethnicity-aware pipeline ranging from 22.0 to 25.6 dB across different ethnicities.

Technical Implementation Details

Technology Stack

The project leverages a powerful combination of tools:

```python
# Core Libraries
import os

from tensorflow.keras.utils import array_to_img, img_to_array, load_img
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from skimage.color import rgb2lab, lab2rgb, rgb2gray
from skimage.io import imsave
import numpy as np
import tensorflow as tf
```

Data Processing Pipeline

- Image Loading: Batch processing of training images at 256x256 resolution

- Normalization: Pixel values scaled to 0-1 range for optimal training

- Color Space Conversion: RGB to LAB conversion for better color learning

- Data Augmentation: ImageDataGenerator for enhanced training diversity
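The Normalization and Color Space Conversion steps can be sketched as follows. The project imports `skimage.color.rgb2lab` for the conversion; so that this example runs with numpy alone, it re-implements the standard sRGB (D65 white point) to CIELAB formulas instead. How the repository scales the channels is an assumption here: L is divided by 100 to land in [0, 1], and a*b* are divided by 128 so they fit the [-1, 1] range of the model's tanh output. `to_training_pair` is a hypothetical helper name.

```python
import numpy as np

# sRGB -> CIE XYZ matrix and D65 reference white (2-degree observer).
RGB_TO_XYZ = np.array([
    [0.412453, 0.357580, 0.180423],
    [0.212671, 0.715160, 0.072169],
    [0.019334, 0.119193, 0.950227],
])
WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def rgb_to_lab(rgb):
    """Convert an (..., 3) sRGB array with values in [0, 1] to CIELAB."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB gamma curve to get linear-light RGB.
    linear = np.where(rgb > 0.04045, ((rgb + 0.055) / 1.055) ** 2.4, rgb / 12.92)
    # Linear RGB -> XYZ, normalized by the reference white.
    xyz = linear @ RGB_TO_XYZ.T / WHITE_D65
    # CIELAB nonlinearity: cube root above a small threshold, linear below it.
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def to_training_pair(rgb_uint8):
    """Split one 8-bit RGB image into (model input, model target).

    Assumed scaling: L / 100 -> [0, 1]; a*b* / 128 -> roughly [-1, 1] for tanh.
    """
    rgb = np.asarray(rgb_uint8, dtype=np.float64) / 255.0  # normalize to [0, 1]
    lab = rgb_to_lab(rgb)
    x = lab[..., :1] / 100.0  # (H, W, 1) luminance input
    y = lab[..., 1:] / 128.0  # (H, W, 2) color target
    return x, y


white = np.full((4, 4, 3), 255, dtype=np.uint8)
x, y = to_training_pair(white)
print(x.shape, y.shape)  # (4, 4, 1) (4, 4, 2)
```

A white image maps to L close to 1 with a*b* close to 0, and saturated colors land well inside [-1, 1]. At inference time the process runs in reverse: the predicted a*b* values are multiplied back by 128, stacked with the input L channel, and converted to RGB (the project imports `lab2rgb` for that step).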

Model Training Process

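The training process begins by loading the images and splitting them into training and test sets:

```python
import os

import numpy as np
from tensorflow.keras.utils import img_to_array, load_img

# Data Preparation: load every training image, resized to 256x256
X = []
for imagename in os.listdir('Dataset/Train/'):
    X.append(img_to_array(load_img(os.path.join('Dataset/Train/', imagename),
                                   target_size=(256, 256))))
X = np.array(X, dtype=float)

# Train-Test Split: first 95% for training, last 5% for testing
split = int(0.95 * len(X))
Xtrain = X[:split]
Xtrain = 1.0 / 255 * Xtrain  # scale pixel values to [0, 1]
Xtest = X[split:]
Xtest = 1.0 / 255 * Xtest
```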

Getting Started

Installation & Setup

Ready to try this amazing technology? Here's how to get started:

Clone the Repository:

```bash
git clone https://github.com/rrupeshh/Auto-Colorization-Of-GrayScale-Image
cd Auto-Colorization-Of-GrayScale-Image
```

Install Dependencies (the `notebook` package provides the `jupyter notebook` command used below):

```bash
pip install tensorflow keras numpy scikit-image notebook
```

Basic Colorization:

```bash
jupyter notebook Auto_color.ipynb
```

Ethnicity-Aware Colorization:

```bash
cd "Ethnicity Aware Autocolorization"
jupyter notebook "Final Testing Both Pipeline.ipynb"
```

Project Structure

```text
Auto-Colorization-Of-GrayScale-Image/
├── Dataset/                                # Training and testing data
├── Screenshots/                            # Result demonstrations
├── result/                                 # Output colorized images
├── Ethnicity Aware Autocolorization/       # Advanced implementation
│   ├── Colorization Final.ipynb            # Main colorization pipeline
│   ├── Ethnic Detection Final.ipynb        # Ethnicity detection
│   └── Final Testing Both Pipeline.ipynb   # Integrated testing
├── Auto_color.ipynb                        # Basic colorization notebook
├── model.h5                                # Pre-trained model weights
└── model.json                              # Model architecture
```

Real-World Applications

Industry Impact

This technology has profound implications across multiple sectors:

- Historical Preservation: Bringing historical photographs to life

- Entertainment Industry: Colorizing classic films and documentaries

- Digital Archiving: Enhancing museum and library collections

- Personal Projects: Restoring family photographs and memories

Ethical Considerations

The ethnicity-aware component addresses crucial ethical concerns:

- Cultural Sensitivity: Respects diverse ethnic characteristics

- Bias Reduction: Aims to minimize algorithmic bias in colorization choices

- Inclusive AI: Aims for fair representation across different ethnicities

- Responsible Innovation: Balances technological advancement with social responsibility

Future Enhancements

Potential Improvements

The project opens doors for exciting future developments:

- Higher Resolution Support: Scaling to 4K and beyond

- Real-time Processing: Optimizing for live video colorization

- Multi-cultural Training: Expanding ethnic diversity in training data

- Interactive Colorization: User-guided color selection and refinement

Community Contributions

At the time of writing, the project has 40 stars and 15 forks on GitHub, a sign of strong community interest. The open-source nature encourages:

- Algorithm improvements and optimizations

- Dataset expansion and diversification

- Novel applications and use cases

- Ethical AI development practices

Conclusion

The Auto-Colorization of Grayscale Images project represents a significant leap forward in AI-powered image processing. By combining sophisticated CNN architecture with ethnicity-aware capabilities, it addresses both technical excellence and social responsibility.

The quantitative results back this up: the model reaches 25.16 dB PSNR, 0.9388 SSIM, and 0.1422 LPIPS, beating the Zhang et al. benchmark on PSNR while staying close to it on SSIM and LPIPS. The natural, realistic colorization that respects cultural diversity while maintaining artistic integrity makes this a standout contribution to the field.

Key Takeaways:

- CNNs can effectively learn complex color relationships from grayscale inputs, achieving superior PSNR performance

- Ethnicity-aware processing enhances both accuracy and cultural sensitivity across diverse populations

- Competitive performance against state-of-the-art models demonstrates the viability of the approach

- The technology has transformative potential across multiple industries, from historical preservation to entertainment

Ready to explore the colorful world of AI-powered image processing? Dive into the code, experiment with the models, and contribute to this exciting field that's literally adding color to our digital world!

Explore the Project: https://github.com/rrupeshh/Auto-Colorization-Of-GrayScale-Image
